
MC- The Uncharted series is known for pushing the boundaries of what the

MC- How much of the data that was captured ended up making it into the final game? JS- It's hard to put an exact number on it. All of the cinematics started as motion capture (it's the first step in the process), so that's 90 minutes' worth right there. Then you have all of the IGCs (in-game cinematics), the scripted sequences that play during gameplay, and all of the gameplay animation. We certainly captured dozens of hours of stuff for the cinematics alone, but only a certain amount made it into the game. As for the gameplay animation, a lot of it is straight-up keyframe animation, and the stuff that started as mocap got so heavily tweaked that the original data isn't recognizable.

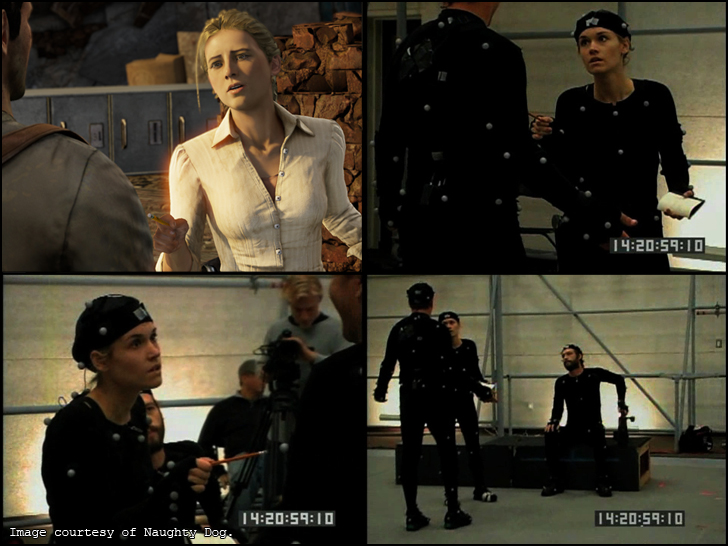

MC- Finding the right performers is one of the most important elements of a successful motion capture project. What were the most important things you looked for during the casting process? JS- Well, we start with the obvious things: talent, a distinctive voice, whether an actor is appropriate for the role, etc. But since we're looking for people who will do both the mocap acting and the voice, we also watch how the candidates physicalize their performance. It's also important to see how they deal with adjustments and whether they take direction well. For the top candidates, we'll actually bring them back for a second audition and have them perform a scene with Nolan (North, who plays Drake) to make sure they have good chemistry.

MC- Did you guys use different actors for the dramatic sequences than for the action-driven sections? JS- The only time we swapped out performers was when we did stunt work. Obviously, we don't want to permanently injure our actors, so for anything even remotely dangerous, we'd bring in stunt performers. This was mostly done for the gameplay animation, the melee fighting in particular. If there was anything in the cinematics that could have used a stunt performer, 90% of the time we just did keyframe animation. Sure, we could've rigged up a bunch of wires and had people flying through the air, but that stuff ends up needing a lot of animation adjustments anyway, so why not just keyframe it?

MC- Can you talk about the software and tools used throughout the Naughty Dog motion capture pipeline? JS- All of our motion capture data was solved and processed by our mocap vendor, House of Moves. Our animators work exclusively in Maya, so House of Moves sends us the data in Maya format, all FK on the arms and legs. We then transfer the data to our character rigs, which were designed to have three skeleton layers. The bottom-most layer is the game skeleton, which is what our game engine reads to translate the data into the game. We never touch that skeleton. Driving that skeleton is what we call the mocap skeleton; all the mocap data gets applied to this layer. Finally, driving the mocap skeleton is the animation rig, which can be used as an offset rig. We've also got custom-made tools that allow us to "flatten" our offset animation (i.e., bake our offsets back onto the mocap rig) or "trace" the mocap animation: we can extract the keyframes we want from the original mocap, then detach the animation rig from the mocap skeleton and do keyframe animation. The facial animation is all joint-based and controlled by a series of sliders, with one layer for the broad motions and one layer for the fine details. We can also control the individual joints on the face, but the only time we do that is for super-special-case poses, like Sully holding the cigar in his mouth.
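To make the three-layer idea concrete, here is a minimal Python sketch under heavily simplified assumptions: each joint carries a single rotation channel sampled once per frame, the animation rig contributes additive offsets on top of the mocap layer, and "tracing" is modeled as sampling keys from the current pose and interpolating linearly between them once the rig is detached. The names (`LayeredRig`, `game_pose`, `flatten`, `trace`, `interpolate`) are hypothetical illustrations, not Naughty Dog's actual Maya tools, which the interview does not describe at the code level.

```python
from dataclasses import dataclass, field

# Hypothetical simplification: one rotation channel (degrees) per joint per frame.
Curve = list[float]


def interpolate(keys: dict[int, float], frame: int) -> float:
    """Linearly interpolate between the nearest keyframes, clamping at the ends."""
    frames = sorted(keys)
    if frame <= frames[0]:
        return keys[frames[0]]
    if frame >= frames[-1]:
        return keys[frames[-1]]
    for a, b in zip(frames, frames[1:]):
        if a <= frame <= b:
            return keys[a] + (frame - a) * (keys[b] - keys[a]) / (b - a)
    return keys[frames[-1]]  # not reached; keeps the return type explicit


@dataclass
class LayeredRig:
    # Mocap skeleton: solved capture data, one curve per joint.
    mocap: dict[str, Curve]
    # Animation rig used as an offset layer (additive, per joint, per frame).
    offsets: dict[str, Curve] = field(default_factory=dict)
    # Keyframes used once the animation rig is detached from the mocap layer.
    keys: dict[str, dict[int, float]] = field(default_factory=dict)
    detached: bool = False

    def game_pose(self, joint: str, frame: int) -> float:
        """Value handed to the game skeleton, which is never edited directly."""
        if self.detached:
            return interpolate(self.keys[joint], frame)
        base = self.mocap[joint][frame]
        offs = self.offsets.get(joint)
        return base if offs is None else base + offs[frame]

    def flatten(self) -> None:
        """Bake the offset layer back onto the mocap layer, then clear the offsets."""
        for joint, offs in self.offsets.items():
            self.mocap[joint] = [m + o for m, o in zip(self.mocap[joint], offs)]
        self.offsets.clear()

    def trace(self, frames: list[int]) -> None:
        """Sample the current pose at chosen frames as keys, then detach."""
        self.keys = {
            joint: {f: self.game_pose(joint, f) for f in frames}
            for joint in self.mocap
        }
        self.detached = True


if __name__ == "__main__":
    rig = LayeredRig(mocap={"elbow": [10.0, 20.0, 30.0, 40.0]})
    rig.offsets["elbow"] = [0.0, 5.0, 5.0, 0.0]
    print(rig.game_pose("elbow", 1))  # 25.0 (mocap 20.0 + offset 5.0)
    rig.flatten()
    print(rig.mocap["elbow"])         # [10.0, 25.0, 35.0, 40.0]
    rig.trace([0, 3])
    print(rig.game_pose("elbow", 1))  # 20.0 (interpolated between keys 0 and 3)
```

The point of the layering is that flattening changes which layer holds the data without changing what the game skeleton receives, while tracing trades the dense mocap curve for a handful of editable keys.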

MC- What comes next in the motion capture world for Naughty Dog? JS- On a basic level, we'll continue to refine our process and tools to get the best possible results within our limited schedules. Beyond that, there's nothing I can really talk about at this point, I'm afraid. :)
